- Google is developing LaMDA technology that could lead to more natural Assistant conversations.

- It responds with logical, consistent answers rather than raw data.

- The technology is still at an early stage.

At best, Google Assistant can usually only answer questions with raw facts; at worst, it offers a confused "sorry, I don't understand." Soon, however, talking to it might feel almost as natural as talking to another person. Google used its I/O conference to introduce LaMDA (Language Model for Dialogue Applications), a project that promises more natural conversations with AI helpers.

Unlike typical AI language models, LaMDA was trained by Google on dialogue, helping it pick up on and participate in the subtleties of open-ended conversation. It can respond sensibly to the context of a given chat instead of simply spitting out facts. An AI could, for instance, follow a meandering conversation that leaps from a TV show to its filming location and then to the local food.


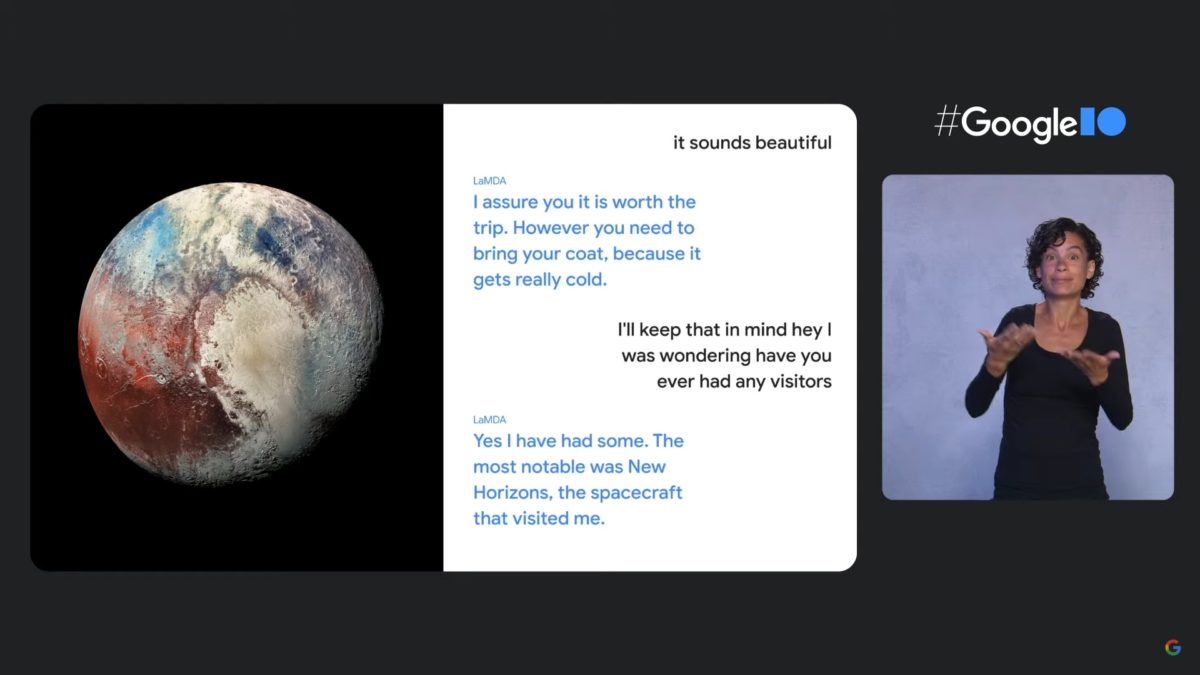

Google used its I/O presentation to show additional examples of LaMDA's capabilities. You could hold a conversation with the dwarf planet Pluto or a paper airplane, learning more about each as the conversation goes on.

LaMDA is still "early," Google stressed. Among other things, the company wants to ensure that the technology both gives witty answers and sticks to accurate claims. It also wants to avoid unfair bias. Still, it's easy to see the potential for Assistant: you could get more human-like answers to your questions, and even take part in back-and-forth discussions.

from Android Authority https://ift.tt/3hB9kd2